Reactive programming

New foundations for high scalability in Mule 4

Mule 4 addresses vertical scalability with a radically different design that has reactive programming at its core. A single Mule runtime instance must make the fullest possible use of the underlying processing and storage resources as it handles traffic. Its ability to handle more traffic when given more underlying resources is what we refer to as vertical scalability.

Download this whitepaper to learn:

- How reactive programming maximizes throughput through greater concurrency.

- How thread management is centralized, automated, and simplified for Mule application development.

- How repeatable concurrent streams work across all event processors.

The following is an excerpt from our whitepaper.

Introduction

In this whitepaper, you will learn about the reactive approach and non-blocking code execution, as well as an innovative approach to thread management and streaming, all in the context of Mule 4. You will also learn how to extend Mule 4 with your own modules in a way that exploits these new capabilities. Finally, you will see the reactive Spring Reactor framework that underlies Mule 4 and how to use it in your extensions.

What is Mule 4?

Mule 4 is the newest version of Mule runtime engine, and it uses reactive programming to greatly enhance scalability. A Mule application is an integration application that combines the areas of logic essential to integration.

A Mule application is developed declaratively as a set of flows. Each flow is a chain of event processors. An event processor processes the data passing through the flow using logic from one of these areas. Mule 4 uses reactive programming to execute event processors in a non-blocking way, which significantly increases the number of events a Mule application can handle concurrently. Each event processor belongs to a module, and modules are added to Mule runtime engine as Mule applications require them. Mule 4 is entirely extensible through custom modules: the Mule SDK, a new addition to the Mule 4 ecosystem, lets you build your own, and custom modules benefit from the same scalable reactive capabilities as the rest of Mule runtime engine.
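
To make the flow model concrete, here is a minimal Java sketch of a flow as an ordered chain of event processors declared up front. `FlowEvent`, `EventProcessor`, and `FlowModel` are hypothetical names for illustration, not Mule runtime or Mule SDK types, and this synchronous version deliberately leaves out the non-blocking, reactive execution that Mule 4 adds.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical event type: just a payload, for illustration only.
final class FlowEvent {
    final String payload;

    FlowEvent(String payload) {
        this.payload = payload;
    }
}

// Hypothetical processor contract: takes an event, returns the processed event.
interface EventProcessor extends UnaryOperator<FlowEvent> {}

// Hypothetical flow: an ordered chain of processors, declared once.
final class FlowModel {
    private final String name;
    private final List<EventProcessor> processors;

    FlowModel(String name, EventProcessor... processors) {
        this.name = name;
        this.processors = new ArrayList<>(Arrays.asList(processors));
    }

    String name() {
        return name;
    }

    // Passes the event through each processor in declaration order.
    FlowEvent process(FlowEvent event) {
        FlowEvent current = event;
        for (EventProcessor processor : processors) {
            current = processor.apply(current);
        }
        return current;
    }

    public static void main(String[] args) {
        // In Mule, processors come from modules; these lambdas stand in for them.
        FlowModel flow = new FlowModel("orders",
                e -> new FlowEvent(e.payload.trim()),
                e -> new FlowEvent(e.payload.toUpperCase()));
        System.out.println(flow.name() + ": " + flow.process(new FlowEvent("  new order  ")).payload);
    }
}
```

The declarative part is that the flow is described as data (an ordered list of processors) and the runtime decides how to drive events through it.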

Mule 4 addresses vertical scalability with a radically different design that has reactive programming at its core. To maximize throughput, our engineers focused on achieving higher concurrency through non-blocking operations and on using CPU, memory, and disk space much more efficiently.

Reactive programming

The advent of single-page web apps and native mobile apps has fueled users’ appetite for fast, responsive applications. We want data to be available now, and we have become accustomed to push notifications. Back-end architectures have evolved to support front-end responsiveness and to drive elasticity and resiliency through event-driven microservices.

Reactive Extensions (Rx) originated in the .NET community to give engineers the tools and frameworks needed to meet this demand, and it quickly spread to other languages. In the Java world it became RxJava, and Spring has fully embraced reactive programming with Project Reactor. Reactor now lies at the heart of Mule 4’s internal architecture.

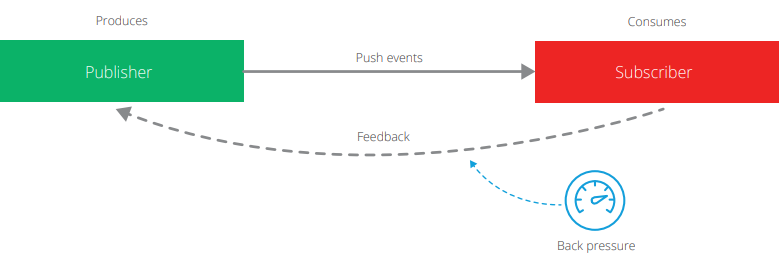

Reactive programming combines the best of the Iterator and Observer design patterns with functional programming. The Iterator pattern gives the consumer control over when to consume data. The Observer pattern is the opposite: it gives the publisher control over when to push data. Reactive programming combines the two, so data is pushed to the subscriber as soon as it is available, but in a way that protects the subscriber from being overwhelmed. The functional aspect is declarative: you can compare the functional composition of operations to the declarative power of SQL, except that you query and filter data in real time against a stream of events as they arrive. Chaining operators together in this way avoids the "callback hell" that has emerged in asynchronous programming and that makes such code hard to write and maintain.
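
The balance between push and pull is visible in the Reactive Streams interfaces that ship with the JDK as `java.util.concurrent.Flow` (Java 9 and later). In this minimal sketch, `SubmissionPublisher` pushes items, but the subscriber only receives as many as it has asked for through `Subscription.request(n)`; anything beyond that waits in the publisher's buffer. It uses the standard JDK API rather than Reactor, and `BatchingSubscriber` and `BackpressureDemo` are illustrative names.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;

// Subscriber that pulls items in fixed-size batches: it is never sent more
// items than it has requested, however fast the publisher produces them.
class BatchingSubscriber implements Flow.Subscriber<Integer> {
    private final int batchSize;
    private final List<Integer> received = new CopyOnWriteArrayList<>();
    private final CompletableFuture<List<Integer>> done = new CompletableFuture<>();
    private Flow.Subscription subscription;
    private int remainingInBatch;

    BatchingSubscriber(int batchSize) {
        this.batchSize = batchSize;
    }

    @Override
    public void onSubscribe(Flow.Subscription subscription) {
        this.subscription = subscription;
        remainingInBatch = batchSize;
        subscription.request(batchSize); // initial demand
    }

    @Override
    public void onNext(Integer item) {
        received.add(item);
        if (--remainingInBatch == 0) { // batch fully consumed: ask for the next one
            remainingInBatch = batchSize;
            subscription.request(batchSize);
        }
    }

    @Override
    public void onError(Throwable error) {
        done.completeExceptionally(error);
    }

    @Override
    public void onComplete() {
        done.complete(received);
    }

    CompletableFuture<List<Integer>> result() {
        return done;
    }
}

class BackpressureDemo {
    static List<Integer> run(int count, int batchSize) {
        BatchingSubscriber subscriber = new BatchingSubscriber(batchSize);
        try (SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            for (int i = 1; i <= count; i++) {
                publisher.submit(i); // undelivered items wait in a bounded buffer
            }
        } // close() signals onComplete once buffered items have been delivered
        return subscriber.result().join();
    }

    public static void main(String[] args) {
        System.out.println(run(10, 3)); // prints [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    }
}
```

If a slow subscriber lets the publisher's per-subscriber buffer (`Flow.defaultBufferSize()`, 256 items, for the no-argument constructor) fill up, `submit` blocks, so the subscriber's pace ends up throttling the publisher.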

Reactive programming in Mule 4

Consider a Mule flow to be a chain of (mostly) non-blocking publisher/subscriber pairs. The source is the publisher to the flow, and it is also a subscriber to the flow, because it must receive the event published by the last event processor in the flow.

The HTTP Listener, for example, publishes an event and subscribes to the event produced by the flow. Each event processor subscribes to the event published by the processor before it and processes it in a non-blocking way.
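
The chain of publisher/subscriber pairs can be sketched with the same JDK `Flow` API. Each `StepProcessor` (adapted from the `TransformProcessor` example in the `SubmissionPublisher` Javadoc) subscribes to the step before it and publishes to the step after it, while the source both publishes requests and consumes the final results, much as the HTTP Listener publishes a request and receives the response. This is a model for illustration under stated assumptions: the class names are hypothetical, and Mule's real implementation is built on Project Reactor, not on these classes.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.function.Function;

// One link in the chain: a subscriber to the previous step and a publisher to the next.
class StepProcessor<S, T> extends SubmissionPublisher<T> implements Flow.Processor<S, T> {
    private final Function<? super S, ? extends T> step;
    private Flow.Subscription subscription;

    StepProcessor(Executor executor, Function<? super S, ? extends T> step) {
        super(executor, Flow.defaultBufferSize());
        this.step = step;
    }

    @Override
    public void onSubscribe(Flow.Subscription subscription) {
        this.subscription = subscription;
        subscription.request(1); // ask upstream for one event at a time
    }

    @Override
    public void onNext(S item) {
        submit(step.apply(item)); // publish the processed event downstream
        subscription.request(1);  // then signal demand for the next one
    }

    @Override
    public void onError(Throwable error) {
        closeExceptionally(error);
    }

    @Override
    public void onComplete() {
        close(); // propagate completion to the next step
    }
}

class PublisherSubscriberChain {
    // The source publishes requests and also consumes the chain's final output.
    static List<String> run(List<String> requests) {
        ExecutorService executor = Executors.newCachedThreadPool();
        List<String> responses = new CopyOnWriteArrayList<>();
        try {
            SubmissionPublisher<String> source = new SubmissionPublisher<>(executor, Flow.defaultBufferSize());
            StepProcessor<String, String> trim = new StepProcessor<>(executor, String::trim);
            StepProcessor<String, String> upper = new StepProcessor<>(executor, String::toUpperCase);

            source.subscribe(trim);
            trim.subscribe(upper);
            CompletableFuture<Void> done = upper.consume(responses::add); // source side receives results

            requests.forEach(source::submit);
            source.close(); // completion flows down the chain after buffered events drain
            done.join();
        } finally {
            executor.shutdown();
        }
        return responses;
    }

    public static void main(String[] args) {
        // prints [GET /ORDERS, GET /CUSTOMERS]
        System.out.println(run(List.of(" get /orders ", " get /customers ")));
    }
}
```

Requesting one event at a time keeps each step from being flooded by the step before it, which is the same protection described for subscribers in general.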

Non-blocking operations in Mule 4

Non-blocking operations have become the norm in Mule 4 and are fundamental to the reactive paradigm. The reasoning is that threads that sit idle waiting for an operation to complete are wasted resources. On the other hand, thread switching has a cost: if each event processor in a Mule flow executes on a different thread, every switch adds latency to the flow's execution. The advantage, however, is that a Mule flow can handle greater concurrency, because threads are kept busy with necessary work instead of waiting.
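
The trade-off can be illustrated with `CompletableFuture`. In this sketch, a single worker thread starts each request, hands off a simulated I/O call whose result is completed later by a timer thread, and resumes only when that result is ready. Because the worker never parks while waiting, ten requests with 200 ms of simulated latency should finish in little more than 200 ms, whereas sleeping on that one worker would take at least 2 seconds. The timer is an assumption standing in for real non-blocking I/O, and `NonBlockingDemo` is an illustrative name, not Mule code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

class NonBlockingDemo {
    // Simulated non-blocking I/O: the caller gets a future immediately and the
    // timer thread completes it later, so no worker thread waits for the result.
    static CompletableFuture<String> simulatedIo(ScheduledExecutorService timer, int id, long delayMs) {
        CompletableFuture<String> result = new CompletableFuture<>();
        timer.schedule(() -> result.complete("response-" + id), delayMs, TimeUnit.MILLISECONDS);
        return result;
    }

    // Handles every request with ONE worker thread; each request hops between
    // threads, which is the latency cost the text describes.
    static List<String> handleAll(int requests, long delayMs) {
        ExecutorService worker = Executors.newSingleThreadExecutor();
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        try {
            List<CompletableFuture<String>> pending = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                final int id = i;
                pending.add(CompletableFuture
                        .supplyAsync(() -> id, worker)                    // start on the worker
                        .thenCompose(n -> simulatedIo(timer, n, delayMs)) // worker is free while "I/O" runs
                        .thenApplyAsync(String::toUpperCase, worker));    // resume on the worker
            }
            List<String> responses = new ArrayList<>();
            for (CompletableFuture<String> future : pending) {
                responses.add(future.join());
            }
            return responses;
        } finally {
            worker.shutdown();
            timer.shutdown();
        }
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        List<String> responses = handleAll(10, 200);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(responses.size() + " responses in " + elapsedMs + " ms");
    }
}
```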

Thread management and auto-tuning in Mule 4

Mule 4 eliminates the need for manual thread pool configuration, because the Mule runtime handles it automatically.

- Centralized thread pools - Thread pools are no longer configurable at the level of a Mule application. Instead, there are three centralized pools: CPU_INTENSIVE, CPU_LITE, and BLOCKING_IO. All three are managed by the Mule runtime and shared across all applications deployed to that runtime.

- HTTP thread pools - The Mule 4 HTTP module uses Grizzly under the covers, and Grizzly requires selector thread pools to be configured. Selector threads are a Java NIO concept: they check the state of NIO channels and create and dispatch events when they arrive. The HTTP Listener selectors poll for request events only, and the HTTP Requester selectors poll for response events only.

- Thread pool responsibilities - The source of the flow and each event processor must execute on a thread taken from one of the three centralized thread pools (the exception being the selector threads needed by the HTTP Listener and Requester). The task an event processor performs is either 100% non-blocking, partially blocking, or mostly blocking.

- Thread pool sizing - The minimum size of the three thread pools is determined when the Mule runtime starts up.

- Thread pool scheduler assignment criteria - In Java, the responsibility for managing thread pools falls on the Scheduler. It takes threads from the pool, returns them to it, and adds new threads to the pool.
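
The centralized model can be sketched as one runtime-wide object that owns the three pools and hands out schedulers, so two applications asking for the same kind of pool receive the same executor. The pool names come from the list above; the `RuntimePools` and `PoolKind` names, the sizing formulas, and the one-line notes on what each pool is for are assumptions made for illustration (loosely following how MuleSoft's documentation describes the pools), not Mule's implementation.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

// The three centralized pools named in the text.
enum PoolKind {
    CPU_LITE,      // assumed use: short, non-blocking work
    CPU_INTENSIVE, // assumed use: CPU-heavy work such as large transformations
    BLOCKING_IO    // assumed use: work that blocks its thread, e.g. blocking I/O
}

// Hypothetical runtime-wide registry: every application shares these pools.
final class RuntimePools implements AutoCloseable {
    private final Map<PoolKind, ExecutorService> pools = new EnumMap<>(PoolKind.class);

    RuntimePools() {
        int cores = Runtime.getRuntime().availableProcessors();
        // Sizes are fixed once at start-up; these formulas are illustrative assumptions.
        pools.put(PoolKind.CPU_LITE, Executors.newFixedThreadPool(2 * cores, named("CPU_LITE")));
        pools.put(PoolKind.CPU_INTENSIVE, Executors.newFixedThreadPool(cores, named("CPU_INTENSIVE")));
        pools.put(PoolKind.BLOCKING_IO, Executors.newCachedThreadPool(named("BLOCKING_IO")));
    }

    // Names threads after their pool so it is visible where a task ran.
    private static ThreadFactory named(String prefix) {
        AtomicInteger counter = new AtomicInteger();
        return task -> {
            Thread thread = new Thread(task, prefix + "-" + counter.incrementAndGet());
            thread.setDaemon(true);
            return thread;
        };
    }

    // Applications request a scheduler by kind; none of them creates its own pool.
    ExecutorService schedulerFor(PoolKind kind) {
        return pools.get(kind);
    }

    @Override
    public void close() {
        pools.values().forEach(ExecutorService::shutdown);
    }
}

final class SharedPoolsDemo {
    // Runs a task on the given pool and reports which thread executed it.
    static String threadNameFor(RuntimePools runtime, PoolKind kind) {
        return CompletableFuture
                .supplyAsync(() -> Thread.currentThread().getName(), runtime.schedulerFor(kind))
                .join();
    }

    public static void main(String[] args) {
        try (RuntimePools runtime = new RuntimePools()) {
            // Two "applications" asking for CPU_LITE receive the same shared executor.
            ExecutorService appA = runtime.schedulerFor(PoolKind.CPU_LITE);
            ExecutorService appB = runtime.schedulerFor(PoolKind.CPU_LITE);
            System.out.println("shared: " + (appA == appB));
            System.out.println(threadNameFor(runtime, PoolKind.BLOCKING_IO));
        }
    }
}
```

Keeping pool ownership in one place is what lets the runtime size and tune threads for all deployed applications at once instead of per application.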

Learn more from our Whitepaper: “Reactive programming: New foundations for high scalability in Mule 4”

- Section 1: Introduction

- Section 2: What is Mule 4?

- Section 3: Reactive programming

- Section 4: Spring Reactor Flux

- Section 5: Reactive programming in Mule 4

- Section 6: Back pressure

- Section 7: Non-blocking operations in Mule 4

- Section 8: Thread management and auto-tuning in Mule 4

- Section 9: Streaming in Mule 4

- Section 10: Scalability features in Mule 4 SDK
